Welcome to the realm of data lakes, where structured, semi-structured, and unstructured data come together in a unified storage system. Imagine a data repository that offers virtually unlimited scalability and can store any type of file, much as the file system on your computer does. In this blog post, we will explore the concept of data lakes, understand their underlying principles, and discover the technologies that make them possible.

The Three Principles of a Data Lake

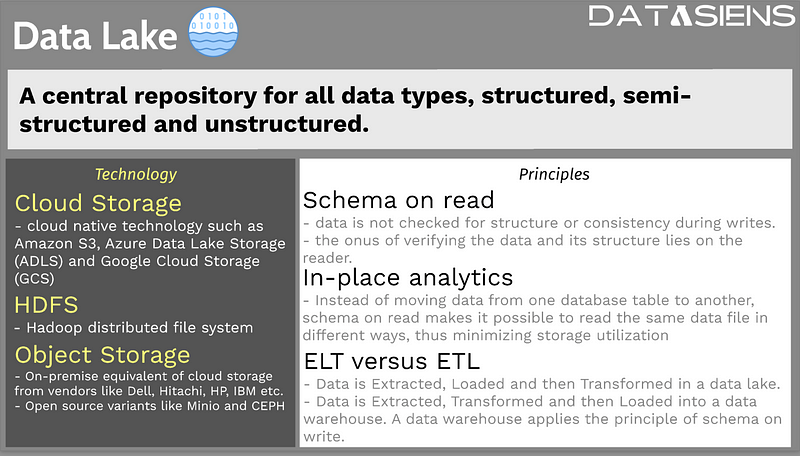

A data lake is more than just a storage system; it follows three fundamental principles that shape its architecture and functionality. Let's dive deeper into these principles and understand their significance.

1. Schema on Read: A Paradigm Shift in Data Storage

Data lakes embrace the concept of schema on read, which means that data is not validated or structured when it is written. Instead, the reader is responsible for verifying the data's structure. This approach makes data ingestion easy and flexible, allowing applications and users to write any data to the data lake. However, without proper governance, a data lake can quickly become cluttered and hard to navigate, which is why data governance is essential to keeping it clean and organized.
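To make schema on read concrete, here is a minimal sketch using only the Python standard library. It assumes a local temporary directory as a stand-in for the data lake, and write_raw and read_with_schema are hypothetical helper names for illustration: the writer stores JSON Lines records exactly as they arrive, and only the reader decides which fields and types it expects.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical stand-in for a data lake: a local temp directory instead of object storage
LAKE = Path(tempfile.mkdtemp())

def write_raw(records, name):
    """Write records as JSON Lines exactly as received; nothing is validated on write."""
    path = LAKE / name
    with path.open("w") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")
    return path

def read_with_schema(path, schema):
    """Apply a schema at read time: keep only the schema's fields, cast their types,
    and count rows that do not fit instead of failing the whole read."""
    rows, rejected = [], 0
    with path.open() as f:
        for line in f:
            raw = json.loads(line)
            try:
                rows.append({field: cast(raw[field]) for field, cast in schema.items()})
            except (KeyError, ValueError, TypeError):
                rejected += 1
    return rows, rejected

# Writers can land inconsistent records: a string age, an extra field, a missing field
path = write_raw(
    [
        {"name": "John Doe", "age": "30"},
        {"name": "Jane Doe", "age": 25, "city": "Oslo"},
        {"name": "Peter Smith"},
    ],
    "people.jsonl",
)

# The reader, not the writer, decides what structure it needs
rows, rejected = read_with_schema(path, {"name": str, "age": int})
print(rows)      # [{'name': 'John Doe', 'age': 30}, {'name': 'Jane Doe', 'age': 25}]
print(rejected)  # 1
```

The rejected-row counter hints at why governance matters: nothing on the write path stops malformed records from piling up.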

2. In-Place Analytics: Harnessing the Power of Schema on Read

Schema on read enables a capability known as in-place analytics. In a traditional database or data warehouse, supporting a new kind of analysis often means transforming the data and copying it into new tables. With schema on read, the same data file can be read in different ways by different consumers, so there is no need to keep multiple copies of the dataset, which cuts both storage costs and duplication. For example, a data scientist and an analyst can create different views of the same dataset, each tailored to their use case, without duplicating the underlying data.
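The analyst and data scientist example can be sketched with the standard library. This is an illustration under assumptions: a single local CSV file stands in for an object in the lake, and analyst_view and data_scientist_view are hypothetical reader functions. Both derive their own view from the same file at read time, and neither writes a copy.

```python
import csv
import tempfile
from pathlib import Path

# One shared file in the "lake" (a local temp file stands in for object storage)
PATH = Path(tempfile.mkdtemp()) / "people.csv"
PATH.write_text("name,age,city\nJohn Doe,30,Oslo\nJane Doe,25,Lima\nPeter Smith,40,Oslo\n")

def analyst_view(path):
    """Average age per city, computed while reading the raw file."""
    totals = {}
    with path.open(newline="") as f:
        for row in csv.DictReader(f):
            total, count = totals.get(row["city"], (0, 0))
            totals[row["city"]] = (total + int(row["age"]), count + 1)
    return {city: total / count for city, (total, count) in totals.items()}

def data_scientist_view(path):
    """Numeric feature rows (age, is_oslo) derived from the same file; nothing is copied."""
    with path.open(newline="") as f:
        return [(int(r["age"]), int(r["city"] == "Oslo")) for r in csv.DictReader(f)]

print(analyst_view(PATH))         # {'Oslo': 35.0, 'Lima': 25.0}
print(data_scientist_view(PATH))  # [(30, 1), (25, 0), (40, 1)]
```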

3. ELT vs. ETL: Understanding Data Transformation in Data Lakes

In the world of data pipelines, it's crucial to distinguish between ELT (Extract, Load, Transform) and ETL (Extract, Transform, Load) approaches. In a data lake, data is first extracted and loaded, and then transformed based on specific user requirements and use cases. This order allows for the flexibility of schema on read. In contrast, in a data warehouse, data is extracted and transformed before being loaded because the data warehouse enforces a predefined structure or schema during the write process. Understanding the distinction between ELT and ETL is key to unlocking the true potential of data lakes and data warehousing.
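The difference in ordering can be shown with a toy pipeline. This is a sketch, not a real pipeline framework: SOURCE is made-up data, and clean, etl, elt, and transform are hypothetical names. ETL drops rows that do not fit the schema before loading, while ELT keeps every raw row so that later use cases can apply their own transformations.

```python
# Hypothetical raw source records (made-up data)
SOURCE = [
    {"name": " john doe ", "age": "30"},
    {"name": "Jane Doe", "age": "n/a"},
    {"name": "Peter Smith", "age": "40"},
]

def clean(record):
    """One possible transformation: tidy the name and cast age to int (ValueError if impossible)."""
    return {"name": record["name"].strip().title(), "age": int(record["age"])}

def etl(source):
    """ETL: transform first, then load only rows that fit the warehouse schema."""
    warehouse = []
    for record in source:
        try:
            warehouse.append(clean(record))
        except ValueError:
            pass  # rows that don't fit the schema never reach the warehouse
    return warehouse

def elt(source):
    """ELT: load everything as-is; transformations run later, per use case."""
    return list(source)

def transform(lake, fn):
    """Apply a use-case-specific transformation to raw data already in the lake."""
    out = []
    for record in lake:
        try:
            out.append(fn(record))
        except ValueError:
            continue  # skip rows this particular use case can't handle
    return out

warehouse = etl(SOURCE)
lake = elt(SOURCE)

# The lake still holds all raw rows, so a new use case can transform them differently later
names_only = transform(lake, lambda r: r["name"].strip().title())
print(warehouse)   # [{'name': 'John Doe', 'age': 30}, {'name': 'Peter Smith', 'age': 40}]
print(names_only)  # ['John Doe', 'Jane Doe', 'Peter Smith']
```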

Implementing Data Lakes: Cloud Storage and Beyond

Now that we grasp the fundamental principles of data lakes, let's explore the technologies used to implement them.

Cloud Storage

Cloud storage, offered by major cloud vendors such as Amazon, Microsoft, and Google, is the most popular choice for building data lakes. These services provide virtually unlimited scalability and eliminate the need for upfront hardware investments. Data lakes built on cloud storage let organizations store terabytes or even petabytes of data without worrying about hardware constraints.

Amazon Simple Storage Service (S3) was one of the first cloud storage services on the market and remains the most popular choice for building data lakes. Microsoft Azure Data Lake Storage (ADLS) and Google Cloud Storage (GCS) are also robust and widely used options. Other vendors, such as DigitalOcean and Alibaba Cloud, offer similar cloud storage services.
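Although the naming differs, all three services address data as objects stored in a bucket (a container, in Azure terms) under a path-like key. The sketch below splits the URI formats commonly used with each: s3:// for S3, gs:// for GCS, and abfss://container@account.dfs.core.windows.net/... for ADLS Gen2 through the ABFS driver. split_object_uri is a hypothetical helper, and the bucket, container, account, and path names are placeholders.

```python
from urllib.parse import urlparse

def split_object_uri(uri):
    """Return (scheme, bucket_or_container, key) for an object-storage URI."""
    parsed = urlparse(uri)
    key = parsed.path.lstrip("/")
    if parsed.scheme in ("s3", "gs"):
        # Amazon S3 and Google Cloud Storage: scheme://bucket/key
        return parsed.scheme, parsed.netloc, key
    if parsed.scheme in ("abfs", "abfss"):
        # ADLS Gen2 via the ABFS driver: scheme://container@account.dfs.core.windows.net/path
        container = parsed.netloc.split("@", 1)[0]
        return parsed.scheme, container, key
    raise ValueError(f"unsupported scheme: {parsed.scheme!r}")

for uri in [
    "s3://my-bucket/raw/my-file.csv",
    "gs://my-bucket/raw/my-file.csv",
    "abfss://my-container@myaccount.dfs.core.windows.net/raw/my-file.csv",
]:
    print(split_object_uri(uri))
```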

One of the key advantages of cloud storage is its flexibility and pay-as-you-go pricing model. Organizations pay only for the storage they use, so capacity can grow or shrink as needed without buying additional hardware. This scalability and cost-effectiveness make cloud storage an ideal choice for implementing data lakes.
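A bit of arithmetic shows why paying per gigabyte stored matters when usage fluctuates. The rate below is a hypothetical placeholder, not a real vendor price, and the monthly usage figures are made up. The fixed-capacity function is a deliberate simplification that charges the same rate for capacity sized to the peak month.

```python
# HYPOTHETICAL rate, for illustration only: real prices vary by vendor, region,
# and storage class, and bills also include request and data-transfer charges.
RATE_PER_GB_MONTH = 0.02

def pay_as_you_go_cost(monthly_usage_gb, rate=RATE_PER_GB_MONTH):
    """Pay each month only for the gigabytes actually stored that month."""
    return sum(gb * rate for gb in monthly_usage_gb)

def fixed_capacity_cost(monthly_usage_gb, rate=RATE_PER_GB_MONTH):
    """Simplified comparison: capacity sized for the peak month and paid for every month."""
    return max(monthly_usage_gb) * rate * len(monthly_usage_gb)

usage = [100, 400, 250]  # made-up GB stored in each of three months
print(round(pay_as_you_go_cost(usage), 2))   # 15.0
print(round(fixed_capacity_cost(usage), 2))  # 24.0
```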

Cloud storage services also provide robust security mechanisms to protect data and prevent unauthorized access. Encryption, access controls, and monitoring tools are built into these services to help ensure data confidentiality and integrity. These features also help organizations meet industry regulations and data governance requirements.
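As one example of an access control, an Amazon S3 bucket policy can reject any request that is not sent over TLS by denying access when the aws:SecureTransport condition key is false. The sketch below only builds and prints the policy document; the bucket name my-bucket is a placeholder, and actually applying the policy (shown in a comment) assumes boto3 is installed and AWS credentials are configured.

```python
import json

def deny_insecure_transport_policy(bucket):
    """Build an S3 bucket policy that denies every request not made over TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }

policy_json = json.dumps(deny_insecure_transport_policy("my-bucket"), indent=2)
print(policy_json)

# Applying it requires boto3 and AWS credentials, for example:
# boto3.client("s3").put_bucket_policy(Bucket="my-bucket", Policy=policy_json)
```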

Whether you choose Amazon S3, Azure Data Lake Storage, or Google Cloud Storage, the underlying principles and benefits of cloud storage for data lakes remain consistent: virtually unlimited scalability, cost-effectiveness, robust security, and seamless integration with other cloud services.

For organizations that need to keep data on their own infrastructure, for example because of stricter data protection requirements, the Hadoop Distributed File System (HDFS) has long been a preferred choice. HDFS, the storage layer of the open-source Apache Hadoop framework, lets organizations build highly reliable storage on commodity hardware by replicating data across machines. Object storage solutions from vendors such as HP, IBM, Hitachi, and NetApp provide dense, fast disks, so storage can scale independently of compute. Building your own object storage with software such as MinIO or Ceph offers even more flexibility and reduces vendor lock-in.
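HDFS gets its reliability by splitting each file into large blocks and storing several replicas of every block on different DataNodes, so a block stays readable as long as one of its replicas survives. The back-of-the-envelope sketch below assumes the Hadoop defaults of a 128 MB block size (dfs.blocksize) and a replication factor of 3 (dfs.replication); hdfs_footprint is a hypothetical helper, not a Hadoop API.

```python
import math

# Hadoop defaults (both configurable per cluster or per file)
BLOCK_SIZE_MB = 128  # dfs.blocksize
REPLICATION = 3      # dfs.replication

def hdfs_footprint(file_size_mb, block_size_mb=BLOCK_SIZE_MB, replication=REPLICATION):
    """Return (blocks, block_replicas, raw_mb) for one file stored in HDFS.

    A partial last block only uses the space it needs, so raw usage is
    file size times replication, not blocks * block size * replication.
    """
    blocks = math.ceil(file_size_mb / block_size_mb)
    return blocks, blocks * replication, file_size_mb * replication

print(hdfs_footprint(1000))  # (8, 24, 3000)
```

The trade-off is visible in the last number: tolerating hardware failures costs roughly three times the raw disk space of the data itself.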

Bringing It All Together: Data Lakes in Action

Data lakes serve as a versatile platform for data scientists and fit naturally into their workflows. Whether they are training models, making predictions, or storing output files, data scientists can work with cloud storage directly from their data science platforms. Modern approaches also include feature stores for managing ML features and vector databases for AI applications. The following example uploads a small dataset to an Amazon S3 bucket and reads it back into a pandas DataFrame using AWS SDK for pandas, the Python library imported as awswrangler (formerly AWS Data Wrangler). The bucket name my-bucket is a placeholder for a bucket you have write access to.

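```python
import awswrangler as wr  # AWS SDK for pandas (formerly AWS Data Wrangler)
import pandas as pd

# Create a small DataFrame to upload
df = pd.DataFrame({'Name': ['John Doe', 'Jane Doe', 'Peter Smith'], 'Age': [30, 25, 40]})

# Upload the data to S3 as a CSV file (needs AWS credentials and an existing bucket)
wr.s3.to_csv(df=df, path='s3://my-bucket/my-file.csv', index=False)

# Read the data from S3 back into a DataFrame
df = wr.s3.read_csv(path='s3://my-bucket/my-file.csv')

# Print the data
print(df)
```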

This example highlights the seamless integration between cloud storage and data science workflows. Once the data is in a pandas DataFrame, it can be used in a variety of ways, from training models to making predictions.
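To try the same round trip locally without AWS credentials, an in-memory buffer can stand in for the S3 object. This sketch uses only pandas and the standard library, and it also shows why writing the CSV with index=False matters: by default, to_csv also writes the row index, which comes back as an extra unnamed column.

```python
import io

import pandas as pd

df = pd.DataFrame({'Name': ['John Doe', 'Jane Doe', 'Peter Smith'], 'Age': [30, 25, 40]})

# Default to_csv() also writes the row index as an unnamed first column
with_index = pd.read_csv(io.StringIO(df.to_csv()))

# index=False writes only the data columns, so the round trip matches the original
without_index = pd.read_csv(io.StringIO(df.to_csv(index=False)))

print(list(with_index.columns))     # ['Unnamed: 0', 'Name', 'Age']
print(list(without_index.columns))  # ['Name', 'Age']

# Once loaded, ordinary pandas operations apply, e.g. filtering rows
print(without_index[without_index['Age'] > 28])
```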

Conclusion: Data Lakes Are Here to Stay

Data lakes have revolutionized data storage and analytics, offering virtually limitless scalability, schema on read, and in-place analytics. They have become a game-changer for modern enterprises, enabling organizations to use structured, semi-structured, and unstructured data in a unified and flexible environment. Whether you opt for cloud storage or implement your own data lake solution, the principles and technologies behind data lakes open up a world of possibilities for data-driven insights and innovation.

In the next blog post, I will describe a newer technology called the data lakehouse. We'll also explore how modern architectures like data fabric and data mesh are reshaping the data landscape, along with emerging technologies like streaming data architectures for real-time processing.